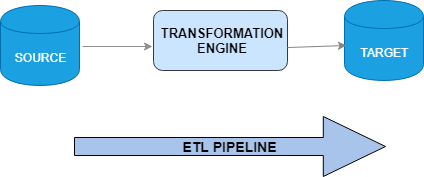

ETL Pipeline

An ETL pipeline is a set of processes that extracts data from an input source, transforms it, and loads it into an output destination such as a data mart, database, or data warehouse for analysis, reporting, and data synchronization.
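A minimal sketch of the three steps named in this definition, using only the Python standard library. The sample CSV text, the `orders` table, and the function names are hypothetical and chosen for illustration; a real pipeline would read from actual source systems and write to a real database or data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; a real pipeline would read from files, APIs, or databases.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,3.25
3,carol,not_a_number
"""


def extract(text):
    """Extract: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize names, convert amounts, skip rows that fail to parse."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows whose amount is not numeric
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline():
    """Run extract, transform, and load against an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    load(transform(extract(RAW_CSV)), conn)
    return conn


if __name__ == "__main__":
    conn = run_pipeline()
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each step in its own function means the source or the destination can be swapped without touching the transformation logic.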

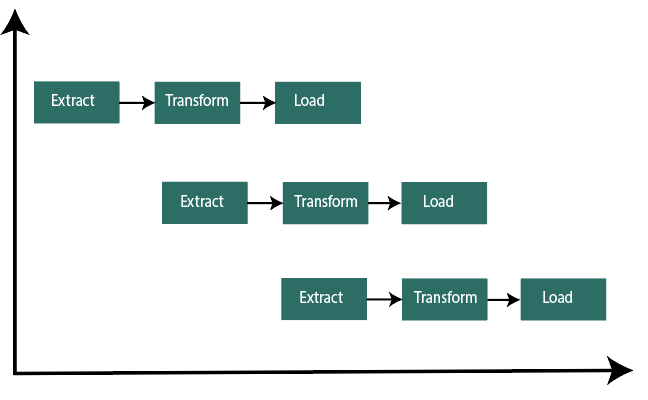

ETL stands for Extract, Transform, and Load.

Extract

In this stage, data is extracted from various heterogeneous sources such as business systems, marketing tools, sensor data, APIs, and transaction databases.

Transform

The second step converts the extracted data from the format in which it was stored into a standardized format that the target applications can process. Various tools are used in the ETL process, such as DataStage, Informatica, and SQL Server Integration Services.

Load

This is the final phase of the ETL process. Here, the information is available in a consistent format, so any specific piece of data can be retrieved and compared with another. The data warehouse can be updated automatically or triggered manually. These steps are performed between warehouses based on the requirements. Data is temporarily stored in at least one set of staging tables as part of the process.

However, the data pipeline does not end when the data is loaded into the database or data warehouse. ETL is evolving to support integration across transactional systems, operational data stores, MDM hubs, the cloud, and Hadoop platforms. Data transformation has become more complicated because of the growth of unstructured data. For example, modern data processes include real-time data such as web analytics data from large e-commerce websites.

Hadoop is often treated as synonymous with big data. Several Hadoop-based tools have been developed to handle different aspects of the ETL process. Which tools to use depends on how the data is structured and on whether it arrives in batches or as streams.

Difference between ETL Pipeline and Data Pipeline

Although ETL pipelines and data pipelines do much the same thing, moving data across platforms and transforming it along the way, the main difference is in the application for which the pipeline is being built.

ETL Pipelines

An ETL pipeline is built for data warehouse applications, including enterprise data warehouses as well as subject-specific data marts. ETL pipelines are also used for data migration when a new application replaces a traditional one. ETL pipelines are generally built with industry-standard ETL tools that are proficient in transforming structured data.
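As a rough illustration of how an ETL pipeline might feed a warehouse table through the staging tables mentioned in the Load stage, the sketch below lands each batch in a staging table and then merges the valid rows into the target. The table names `stg_customer` and `dim_customer` and the function `load_via_staging` are assumptions made for this example, and SQLite stands in for a real data warehouse.

```python
import sqlite3


def load_via_staging(conn, incoming_rows):
    """Land rows in a staging table, then merge valid ones into the warehouse table."""
    cur = conn.cursor()
    cur.execute(
        "CREATE TABLE IF NOT EXISTS dim_customer "
        "(customer_id INTEGER PRIMARY KEY, email TEXT NOT NULL)"
    )
    cur.execute(
        "CREATE TABLE IF NOT EXISTS stg_customer (customer_id INTEGER, email TEXT)"
    )
    cur.execute("DELETE FROM stg_customer")  # staging holds only the current batch
    cur.executemany("INSERT INTO stg_customer VALUES (?, ?)", incoming_rows)
    # Merge step: standardize emails, skip rows without an email,
    # and overwrite warehouse rows that share a customer_id.
    cur.execute(
        """
        INSERT OR REPLACE INTO dim_customer (customer_id, email)
        SELECT customer_id, lower(trim(email))
        FROM stg_customer
        WHERE email IS NOT NULL AND trim(email) <> ''
        """
    )
    conn.commit()
    return cur.execute(
        "SELECT customer_id, email FROM dim_customer ORDER BY customer_id"
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_via_staging(conn, [(1, " Ann@Example.com "), (2, None)])
    print(load_via_staging(conn, [(1, "ann@new.example"), (3, "BO@example.com")]))
```

Because the staging table is cleared at the start of every run, a failed batch can be reloaded without leaving partial rows in the warehouse table.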

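Data warehouse or business intelligence engineers build ETL pipelines.

Data Pipelines

Data pipelines can be built for any application that uses data to bring value. They can be used for integrating data across applications, building data-driven web products, building predictive models, creating real-time data streaming applications, carrying out data mining activities, and building data-driven features in digital products. The use of data pipelines has increased over the last decade with the availability of open-source big data technologies, which are used to build them. These technologies are capable of transforming unstructured as well as structured data. Data engineers build data pipelines.

Differences between the ETL Pipeline and Data Pipeline are:

- Purpose: an ETL pipeline is built for data warehouse applications and data migration, while a data pipeline can be built for any application that uses data to bring value.
- Data and tools: ETL pipelines generally use industry-standard ETL tools that are proficient with structured data, while data pipelines are often built with open-source big data technologies that handle unstructured as well as structured data.
- Builders: data warehouse or business intelligence engineers build ETL pipelines, while data engineers build data pipelines.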
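To make the streaming use case concrete, here is a small sketch of a data pipeline that processes events one at a time and does not load anything into a warehouse. It chains Python generators, so the same stages work on a finite batch (a list) or on an open-ended stream (any iterator of lines). The event format and the function names `parse_events`, `page_views`, and `running_counts` are hypothetical.

```python
import json
from collections import Counter


def parse_events(lines):
    """Lazily parse JSON lines, skipping malformed records."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue


def page_views(events):
    """Keep only page-view events and emit the page they refer to."""
    for event in events:
        if isinstance(event, dict) and event.get("type") == "page_view" and "page" in event:
            yield event["page"]


def running_counts(pages):
    """Emit an updated snapshot of per-page counts after every page view."""
    counts = Counter()
    for page in pages:
        counts[page] += 1
        yield dict(counts)


def demo():
    """Run the pipeline over a small in-memory batch of hypothetical events."""
    lines = [
        '{"type": "page_view", "page": "/home"}',
        "not json",
        '{"type": "click", "page": "/home"}',
        '{"type": "page_view", "page": "/cart"}',
        '{"type": "page_view", "page": "/home"}',
    ]
    return list(running_counts(page_views(parse_events(lines))))


if __name__ == "__main__":
    for snapshot in demo():
        print(snapshot)
```

Each stage pulls one record at a time from the previous stage, so results are available as soon as an event arrives instead of after a whole batch has been collected.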
